SYNSCAPES

A photorealistic synthetic dataset for street scene parsing


REALISM: From sunlight to sensor

Synscapes is created with an end-to-end approach to realism, accurately capturing the effects of everything from illumination by sun and sky, to the scene's geometric and material composition, to the optics, sensor and processing of the camera system. Synscapes was created in collaboration between 7DLabs Inc. and researchers at Linköping University.

25,000 procedural & unique images

The images in the dataset do not follow a driven path through a single virtual world. Instead, an entirely unique scene was procedurally generated for each of the twenty-five thousand images. As a result, the dataset contains a wide range of variations and unique combinations of features.

Physically based rendering, lights and materials

Synscapes was created using the same physically based rendering techniques that power high-end visual effects in the film industry. Unbiased path tracing tracks the propagation of light using radiometric properties from the sun and the sky, modeling its interaction with surfaces using physically based reflectance models, and ensuring that each image is representative of the real world.

Optical simulation

No optical system is perfect, and the effects of light scattering in a camera's lens can have a large impact on an image's appearance. In particular, when the sun is visible, either directly or through bright specular highlights, the image's contrast is significantly reduced. Synscapes models this effect using a long-tail point spread function (PSF).
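The contrast loss described above can be illustrated with a minimal sketch. It assumes a hypothetical kernel made of a sharp core plus an inverse-power tail. The function names, the `falloff` and `core_weight` parameters, and their values are illustrative only and are not taken from the Synscapes renderer.

```python
def long_tail_psf(radius, falloff=2.0, core_weight=0.9):
    """Build a normalized (2*radius+1)^2 kernel: a sharp core plus a slow tail.

    The inverse-power tail and the default values are assumptions made for
    illustration, not the PSF used to render Synscapes.
    """
    size = 2 * radius + 1
    tail = [[0.0] * size for _ in range(size)]
    for y in range(size):
        for x in range(size):
            r2 = (x - radius) ** 2 + (y - radius) ** 2
            if r2 > 0:
                tail[y][x] = 1.0 / (1.0 + r2) ** (falloff / 2.0)
    tail_sum = sum(map(sum, tail))
    # The tail carries (1 - core_weight) of the energy, the center the rest.
    kernel = [[(1.0 - core_weight) * v / tail_sum for v in row] for row in tail]
    kernel[radius][radius] = core_weight
    return kernel


def apply_psf(image, kernel):
    """Spread each pixel of a 2D list of linear radiance values by the kernel.

    Energy that would land outside the image is discarded (zero padding).
    """
    h, w = len(image), len(image[0])
    radius = len(kernel) // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            value = image[y][x]
            if value == 0.0:
                continue
            for ky, row in enumerate(kernel):
                ty = y + ky - radius
                if not 0 <= ty < h:
                    continue
                for kx, k in enumerate(row):
                    tx = x + kx - radius
                    if 0 <= tx < w:
                        out[ty][tx] += value * k
    return out


# A dark scene with a single very bright pixel standing in for the sun.
scene = [[0.01] * 9 for _ in range(9)]
scene[4][4] = 1000.0
blurred = apply_psf(scene, long_tail_psf(radius=4))
```

In this toy scene the tail lifts the dark pixels far from the bright source, so the ratio between the brightest and darkest values shrinks. The PSF must be applied to linear radiance, before any response curve or quantization.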

Sensor simulation and processing

As light strikes a digital sensor, photons are converted into current, and the signal is converted into an image. Synscapes models this process in detail, providing an accurate representation of the following:

Motion blur is present in each image, due both to the speed of the ego vehicle on which the camera is mounted and to that of surrounding vehicles.

Auto-exposure ensures that each image is well lit, but can also be "tricked" in high contrast scenes, much as a real sensor system could.

A 10-bit sensor is simulated, with physically plausible shot noise.

The simulated sensor output is produced by applying a camera response curve, whose result is subsequently quantized to 8-bit PNG-format images.
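The noise and quantization steps listed above can be sketched for a single pixel as follows. This is a rough illustration under stated assumptions: the `full_well` capacity, the plain gamma curve standing in for the camera response, and the Gaussian approximation of Poisson shot noise are all hypothetical choices, not the values or models used by Synscapes. Motion blur and auto-exposure are left out.

```python
import math
import random


def simulate_pixel(radiance, exposure=1.0, full_well=10000.0, gamma=2.2, rng=random):
    """Map one linear radiance value to an 8-bit code value.

    full_well (electrons at saturation) and the gamma response curve are
    illustrative assumptions, not values taken from Synscapes.
    """
    # Expected number of collected electrons, clipped at sensor saturation.
    expected = min(max(radiance * exposure, 0.0), 1.0) * full_well
    # Shot noise is Poisson distributed; for large counts a Gaussian with
    # variance equal to the mean is a common approximation.
    electrons = rng.gauss(expected, math.sqrt(expected)) if expected > 0 else 0.0
    electrons = min(max(electrons, 0.0), full_well)
    # 10-bit analog-to-digital conversion.
    raw10 = round(electrons / full_well * 1023)
    # Camera response curve (here a plain gamma), then 8-bit quantization.
    response = (raw10 / 1023) ** (1.0 / gamma)
    return round(response * 255)


# Repeated exposures of the same radiance differ because of shot noise.
rng = random.Random(0)
samples = [simulate_pixel(0.2, rng=rng) for _ in range(5)]
```

Passing a seeded `random.Random` instance as `rng` makes the noise reproducible, which is convenient when comparing pipeline variants.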

Multi-dimensional distribution

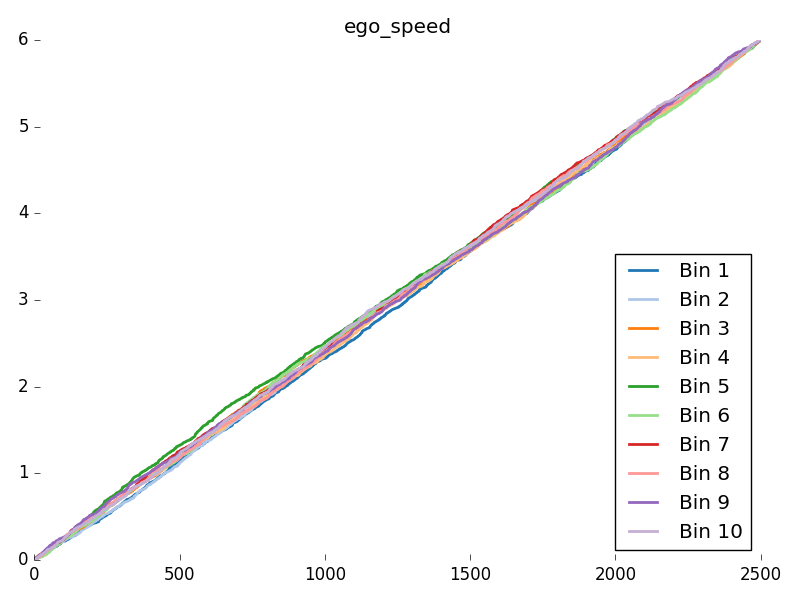

Synscapes was constructed in such a way that each parameter varies independently, providing a broad distribution across all dimensions of variation. As explored further in our white paper, this property allows for analysis of a neural network's performance by evaluating it selectively on different parts of the dataset, for example the 10% nearest to sunrise or the 5% with the narrowest sidewalk.

In the graph above, the dataset is segmented into 10 subsets according to the sun_height metadata property. Still, each subset exhibits a consistent distribution across all other dimensions, with ego_speed and sidewalk_width illustrated.
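The selective evaluation and the ten-way split described above might look like the following sketch. It assumes the per-image metadata has already been loaded into a list of dictionaries. The property names `sun_height`, `ego_speed` and `sidewalk_width` come from the text, while the record layout, the value ranges, the helper names, and the reading of "nearest to sunrise" as "lowest `sun_height`" are assumptions made for illustration.

```python
import random
import statistics


def select_fraction(records, key, fraction, largest=False):
    """Return the `fraction` of records with the smallest (or largest) `key`."""
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be in (0, 1]")
    ranked = sorted(records, key=lambda r: r[key], reverse=largest)
    count = max(1, round(len(ranked) * fraction))
    return ranked[:count]


def split_into_subsets(records, key, n_subsets=10):
    """Sort records by `key` and cut them into n_subsets near-equal groups."""
    ranked = sorted(records, key=lambda r: r[key])
    bounds = [round(i * len(ranked) / n_subsets) for i in range(n_subsets + 1)]
    return [ranked[bounds[i]:bounds[i + 1]] for i in range(n_subsets)]


# Hypothetical metadata with independently sampled parameters; the ranges
# and units are placeholders, not those of the real dataset.
rng = random.Random(0)
records = [
    {
        "sun_height": rng.uniform(0.0, 90.0),
        "ego_speed": rng.uniform(0.0, 30.0),
        "sidewalk_width": rng.uniform(1.0, 5.0),
    }
    for _ in range(1000)
]

# The 10% of images with the lowest sun and the 5% with the narrowest sidewalk.
lowest_sun = select_fraction(records, "sun_height", 0.10)
narrowest = select_fraction(records, "sidewalk_width", 0.05)

# Ten subsets by sun_height; a summary of another property in each subset.
subsets = split_into_subsets(records, "sun_height")
mean_speed = [statistics.mean([r["ego_speed"] for r in s]) for s in subsets]
```

Because the parameters in this toy data are sampled independently, the per-subset mean of `ego_speed` stays roughly constant across the `sun_height` subsets, which is the property the graph illustrates. A network's metric could be computed on each subset in the same way to see how it varies with a single parameter.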

Synscapes 2020